Every year Verizon publishes the Data Breach Investigations Report (DBIR). The report gives the industry valuable data to help us better understand the threat landscape in our respective domains. For many, it is critical to understanding the tactics, techniques, and procedures (TTPs) bad actors are using.

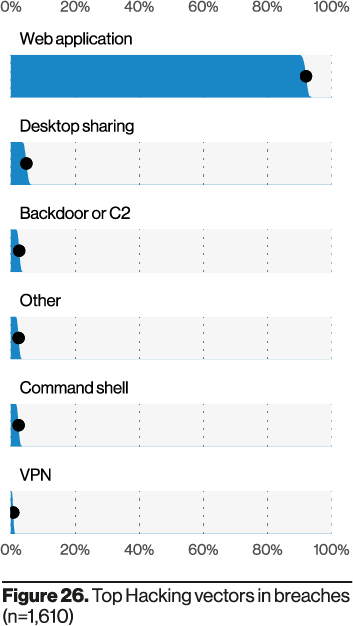

Here at NOC we pay special attention to the attack vectors behind the incidents the report analyzes. In 2020, the leading vector was an organization's web ecosystem (i.e., websites, web applications, endpoints, and web servers).

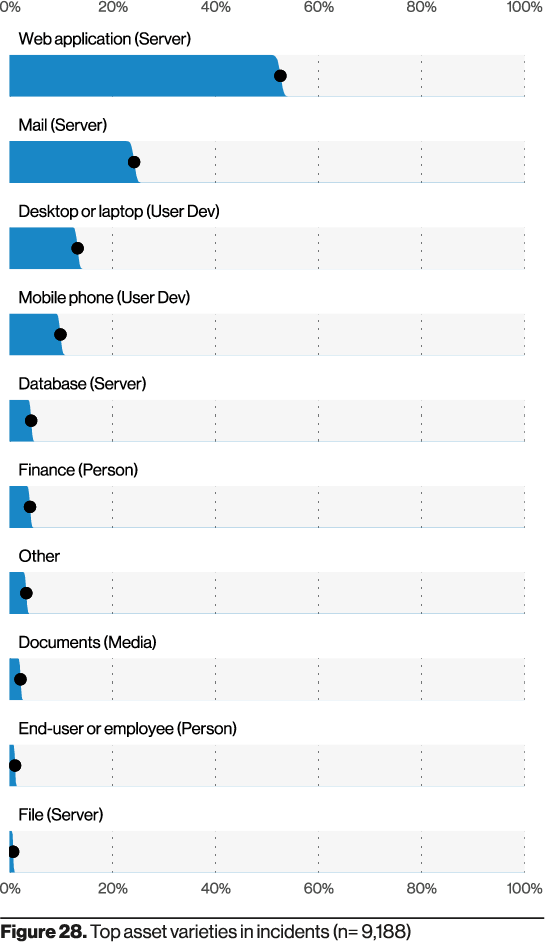

Another interesting finding was the prevalence of servers, specifically web servers, among the assets involved.

Given the leading vector, it makes sense that web servers were also the number one asset abused in the incidents analyzed.

One could surmise that if web applications are the leading vector, then the exploitation of vulnerabilities must be the cause. You'd be only partially correct.

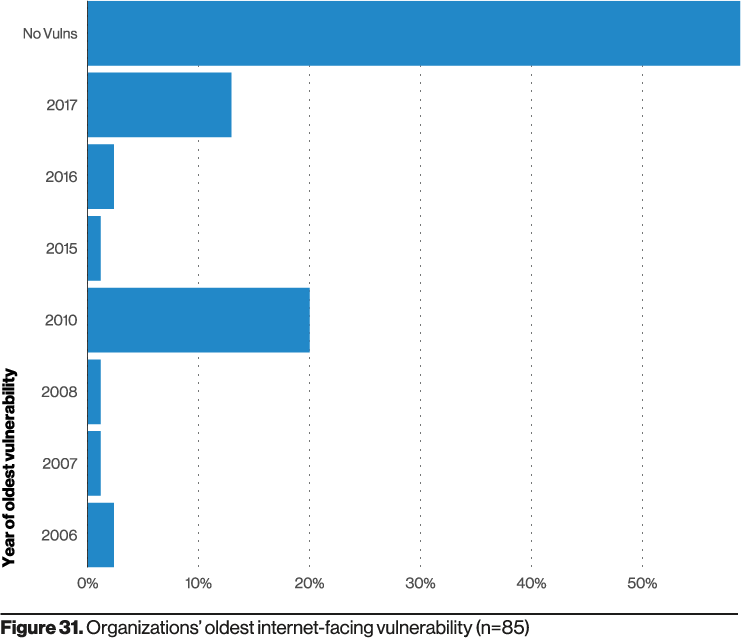

The DBIR found that over 50% of the applications leveraged as a vector had no vulnerabilities, and in those that did, the vulnerabilities were old (circa 2006–2017).

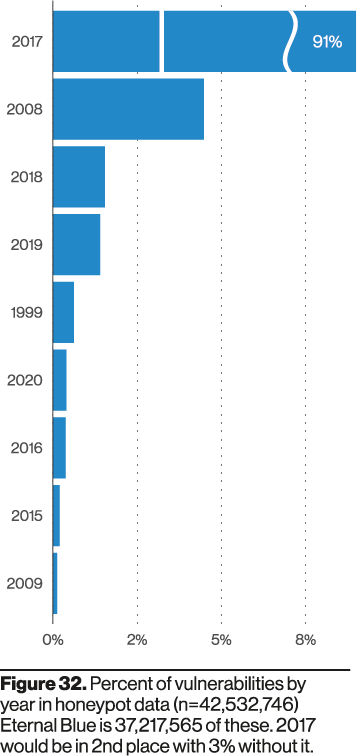

To further make their point, they shared data from their honeypot showing the percentage of vulnerabilities bad actors were looking for, by year:

Approximately 91% of the attempts targeted vulnerabilities from 2017, with some going back as far as 1999. This should sound familiar: in May we shared similar anecdotal data showing that old vulnerabilities were at the core of the attempts we were seeing on our network.

These older vulnerabilities are what attackers continue to exploit.

Source: Verizon DBIR 2021
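To make the honeypot arithmetic concrete, here is a minimal Python sketch of how one might compute the share of exploit attempts per vulnerability year from a list of attempted CVE IDs. It assumes the honeypot log has already been reduced to CVE IDs, and it uses the year embedded in each CVE ID as a rough proxy for the vulnerability's age (that year reflects when the ID was assigned, which can differ from public disclosure). The function names are hypothetical, and this is not how Verizon produced its figure.

```python
import re
from collections import Counter
from typing import Dict, Iterable

# CVE IDs look like CVE-YYYY-NNNN (four or more digits after the year).
CVE_ID = re.compile(r"CVE-(\d{4})-\d{4,}")


def share_by_year(attempted_cves: Iterable[str]) -> Dict[int, float]:
    """Return {year: fraction of attempts}, using the year in each CVE ID."""
    years: Counter = Counter()
    for cve in attempted_cves:
        match = CVE_ID.fullmatch(cve.strip())
        if match:  # silently skip entries that are not well-formed CVE IDs
            years[int(match.group(1))] += 1
    total = sum(years.values())
    if total == 0:
        return {}
    return {year: count / total for year, count in sorted(years.items())}


def share_at_or_before(shares: Dict[int, float], cutoff_year: int) -> float:
    """Sum the fractions for every year up to and including cutoff_year."""
    return sum(frac for year, frac in shares.items() if year <= cutoff_year)
```

For a made-up list of four attempts, `share_at_or_before(share_by_year(["CVE-2017-9841", "CVE-2017-9841", "CVE-2014-6271", "CVE-2021-41773"]), 2017)` returns 0.75, since three of the four attempts target IDs from 2017 or earlier.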

An Organization's Web Ecosystem

For those in the web application security space, these findings are not a surprise. The traditional network perimeter eroded long ago, and organizations face enormous pressure to make systems public-facing. That pressure is exacerbated by an organization's need for velocity, and platforms that facilitate this need are winning (e.g., the ease of use that Content Management Systems (CMS) offer). This is where more data on the types of web applications contributing to the breaches would be especially helpful.

Combine this with a world of limited funding, knowledge, and resources, and it makes sense that bad actors have found a sweet spot exploiting old vulnerabilities that have been left unpatched. For anyone in the IT/security world, this should not be a surprise either.

How much time is given to managing tech debt? How many assets exist that no one knows about due to poor documentation, maintenance, or oversight?

The DBIR makes a very interesting recommendation. It stresses the importance of patching smarter and of using vulnerability prioritization to improve security, but it stops short of recommending technologies like Web Application Firewalls (WAF) that allow you to virtually patch and harden your web ecosystem. This matters most in environments where patching itself requires oversight, testing, and approval and can take weeks, if not months. There, WAF technologies that offer virtual patching and hardening can be highly effective at securing your web ecosystem.

Knowing a vulnerability exists doesn't necessarily mean the organization has the means to patch it. A WAF that offers virtual hardening and patching helps solve that problem.

Source: Tony Perez | NOC
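Virtual patching is easiest to picture as a filter that sits in front of the application and rejects requests matching a known exploit pattern, so the vulnerable code never sees them while the real patch works through testing and approval. The Python sketch below assumes a WSGI application and uses two hypothetical rules (a generic path-traversal pattern and an upload endpoint for a made-up `example-plugin`); they are illustrative only and are not signatures from any real WAF product. A production WAF would typically also normalize encodings more thoroughly, inspect headers and request bodies, and log every match.

```python
import re
from typing import Callable, Iterable, Optional
from urllib.parse import unquote

# Hypothetical virtual-patch rules: (rule name, regex checked against the
# request target). Illustrative only; not signatures from any real WAF.
VIRTUAL_PATCHES = [
    # Block "../" sequences used for directory traversal.
    ("path-traversal", re.compile(r"\.\./")),
    # Block direct requests to a made-up vulnerable upload endpoint.
    ("example-plugin-upload",
     re.compile(r"^/wp-content/plugins/example-plugin/upload\.php")),
]


def matched_rule(path: str, query: str) -> Optional[str]:
    """Return the name of the first rule the request matches, or None."""
    # QUERY_STRING arrives percent-encoded, so decode it before matching;
    # otherwise "..%2F" would slip past the traversal rule.
    target = unquote(path) + ("?" + unquote(query) if query else "")
    for name, pattern in VIRTUAL_PATCHES:
        if pattern.search(target):
            return name
    return None


class VirtualPatchMiddleware:
    """WSGI middleware that rejects requests matching a virtual patch."""

    def __init__(self, app: Callable) -> None:
        self.app = app

    def __call__(self, environ: dict, start_response: Callable) -> Iterable[bytes]:
        rule = matched_rule(environ.get("PATH_INFO", ""),
                            environ.get("QUERY_STRING", ""))
        if rule is not None:
            # Short-circuit: the vulnerable application code never runs.
            start_response("403 Forbidden", [("Content-Type", "text/plain")])
            return [f"Blocked by virtual patch: {rule}\n".encode()]
        return self.app(environ, start_response)
```

Wrapping an existing WSGI app is a one-line change (`app = VirtualPatchMiddleware(app)`), and once the vendor fix is tested and deployed, the corresponding rule can be retired.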

The report doesn't expand on the types of web applications being exploited (e.g., websites vs. endpoints), nor does it cover the methods used to exploit those assets.

Fortunately, those methods haven't really changed in the past decade. While vulnerabilities are naturally at the top of the list, you'll notice the report found that roughly half of the applications had no vulnerabilities, which raises the question: what was abused in those cases? Our experience tells us it's highly probable that the next biggest contributor was the abuse of access controls (i.e., credential stuffing).
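Because credential stuffing abuses legitimate login functionality rather than a software flaw, patching doesn't address it; it is usually detected by behavior instead. One common heuristic is to flag a single source that fails logins for many different usernames in a short window. The Python sketch below implements that sliding-window check. The class name and both thresholds (`window_seconds`, `max_distinct_users`) are hypothetical values for illustration, and it assumes failure events for a given IP arrive in timestamp order.

```python
from collections import defaultdict, deque
from dataclasses import dataclass, field
from typing import Deque, Dict, Tuple


@dataclass
class FailedLoginTracker:
    """Flags a source IP that fails logins for too many distinct usernames.

    Hypothetical thresholds: more than `max_distinct_users` distinct usernames
    failing from one IP within `window_seconds` is treated as likely stuffing.
    """

    window_seconds: float = 300.0
    max_distinct_users: int = 10
    # Per-IP queue of (timestamp, username) failures, oldest first.
    _events: Dict[str, Deque[Tuple[float, str]]] = field(
        default_factory=lambda: defaultdict(deque), init=False, repr=False
    )

    def record_failure(self, ip: str, username: str, timestamp: float) -> bool:
        """Record one failed login; return True if the IP now looks suspicious.

        Assumes timestamps for a given IP are non-decreasing.
        """
        events = self._events[ip]
        events.append((timestamp, username))
        # Evict failures that fall outside the sliding window.
        cutoff = timestamp - self.window_seconds
        while events and events[0][0] <= cutoff:
            events.popleft()
        distinct_users = {user for _, user in events}
        return len(distinct_users) > self.max_distinct_users
```

Counting distinct usernames rather than raw failures is the key design choice: a user repeatedly mistyping their own password stays under the threshold, while a bot cycling through a leaked credential list does not. Brute force against a single account would need a separate per-username counter, and attacks spread across many IPs would evade a per-IP count.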